Key Points

- Scalable = entire digital presence analysis

- Engaging = process is engaging to stakeholders

- Exploratory = exploratory analysis rather than rigid

- Repeatable = confidence in getting similar results multiple times

Strong content analysis is SEER:

Scalable. We should be analyzing entire digital presences across multiple organizational sites rather than focusing narrowly on specific sites or site sections.

Engaging. The process of content analysis (not just the outputs) should be conducted in a way that is engaging to a wide range of stakeholders.

Exploratory. As we dive into the analysis, we are able to answer new questions that are only uncovered as we better understand the digital presence.

Repeatable. If we repeat the same analysis multiple times (for the same digital presence or across digital presences) we get consistent results.

Scalable

Larger organizations have multiple websites drawing content from multiple source systems. Yet most content analysis is done on a much narrower slice of content. Why is analyzing at a broader scale important?

Big changes often require acting broadly.

There's often a bunch of underperforming content that could be archived or deleted.

Searching for duplicate (or near-duplicate) content requires looking across sites.

Most organizations do not understand the entirety of their digital presence.
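As an illustration of one of the reasons above, finding near-duplicate content across sites, here is a minimal sketch. It assumes page text has already been extracted into a dictionary keyed by URL, and it uses Jaccard similarity over three-word shingles, which is one common technique rather than anything this article prescribes. The function names, URLs, and the 0.5 threshold are all hypothetical.

```python
from itertools import combinations


def shingles(text, size=3):
    """Return the set of overlapping word sequences of length `size`."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a, b):
    """Share of shingles the two sets have in common (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def near_duplicates(pages, threshold=0.5):
    """Return URL pairs whose text similarity meets the threshold."""
    sets = {url: shingles(text) for url, text in pages.items()}
    return [
        (u1, u2)
        for u1, u2 in combinations(sorted(sets), 2)
        if jaccard(sets[u1], sets[u2]) >= threshold
    ]


# Illustrative pages from two sites in the same (made-up) digital presence.
pages = {
    "https://www.example.org/about": "our mission is to support local libraries across the region",
    "https://blog.example.org/about-us": "our mission is to support local libraries across the country",
    "https://www.example.org/events": "join us for the annual fundraising gala in spring",
}

pairs = near_duplicates(pages)
```

Note that the match here spans two different sites, so an analysis limited to one site would miss it. Comparing every pair of pages gets slow for a large digital presence, so a real analysis would likely need a more scalable approach.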

To do scalable content analysis, we need to be able to:

Represent content across multiple sites and multiple source systems in a consistent manner. (Note: it's not scalable to have one spreadsheet tab that lists each site as a row with summary information, with the actual item-by-item analysis in separate tabs, each presenting its data in its own way.)

Analyze at different levels. For instance, it should be easy to switch views between a specific site and across the digital presence.
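The two requirements above can be sketched in a few lines: one record shape for every item regardless of where it came from, and one function that can report on a single site or the whole presence. This is only an illustration, not how any particular tool stores content; the `ContentItem` fields, the `count_by` helper, and the sample sites are assumptions made for the example.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ContentItem:
    """One piece of content, in the same shape regardless of source system."""
    url: str
    site: str
    source_system: str  # illustrative values such as "drupal" or "wordpress"
    content_type: str
    pageviews: int


def count_by(items, field, site=None):
    """Count items by `field` for one site, or for the whole presence if site is None."""
    selected = [item for item in items if site is None or item.site == site]
    return Counter(getattr(item, field) for item in selected)


items = [
    ContentItem("https://www.example.org/a", "www.example.org", "drupal", "article", 120),
    ContentItem("https://www.example.org/b", "www.example.org", "drupal", "event", 4),
    ContentItem("https://blog.example.org/c", "blog.example.org", "wordpress", "article", 37),
]

# Same question, two levels: the entire digital presence, then a single site.
presence_view = count_by(items, "content_type")
site_view = count_by(items, "content_type", site="blog.example.org")
```

Because every item has the same fields, switching levels is a filter rather than a different spreadsheet tab with its own layout.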

In Content Chimera, content from all sources is rationalized to a consistent content database, and you can change levels of the analysis:

Engaging

Our content analysis cannot be a complete black box that only Excel wizards, or those with the fortitude to do line-by-line analysis, can engage with. Instead, we need to:

Show the patterns of content, most notably with charts like the one above.

Have live charts so we can explore the content directly with stakeholders (rather than just static charts).

Discuss rules about the content, so people can discuss broad principles rather than just staring at particular pieces of content.
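To make the idea of discussing rules (rather than individual pieces of content) concrete, here is a small sketch of rules expressed as named, testable conditions. It is not Content Chimera's rule format; the `Rule` class, the field names (`type`, `age_years`, `pageviews`), and the thresholds are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    """A named rule: which items it matches and what should happen to them."""
    name: str
    matches: Callable[[dict], bool]
    disposition: str  # for example "delete", "archive", or "keep"


def apply_rules(items, rules, default="keep"):
    """Return {url: (disposition, rule name)}; the first matching rule wins."""
    decisions = {}
    for item in items:
        decisions[item["url"]] = (default, "default")
        for rule in rules:
            if rule.matches(item):
                decisions[item["url"]] = (rule.disposition, rule.name)
                break
    return decisions


# Tentative rules that stakeholders could debate and adjust.
rules = [
    Rule(
        "Stale, low-traffic news",
        lambda i: i["type"] == "news" and i["age_years"] > 3 and i["pageviews"] < 10,
        "delete",
    ),
    Rule("Past events", lambda i: i["type"] == "event", "archive"),
]

items = [
    {"url": "/news/2015-launch", "type": "news", "age_years": 9, "pageviews": 2},
    {"url": "/news/2024-report", "type": "news", "age_years": 1, "pageviews": 800},
    {"url": "/events/gala-2019", "type": "event", "age_years": 5, "pageviews": 40},
]

decisions = apply_rules(items, rules)
affected = {url: d for url, d in decisions.items() if d[0] != "keep"}
```

Recording which rule produced each decision keeps the conversation on the rule ("should three years be five?") rather than on a single page.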

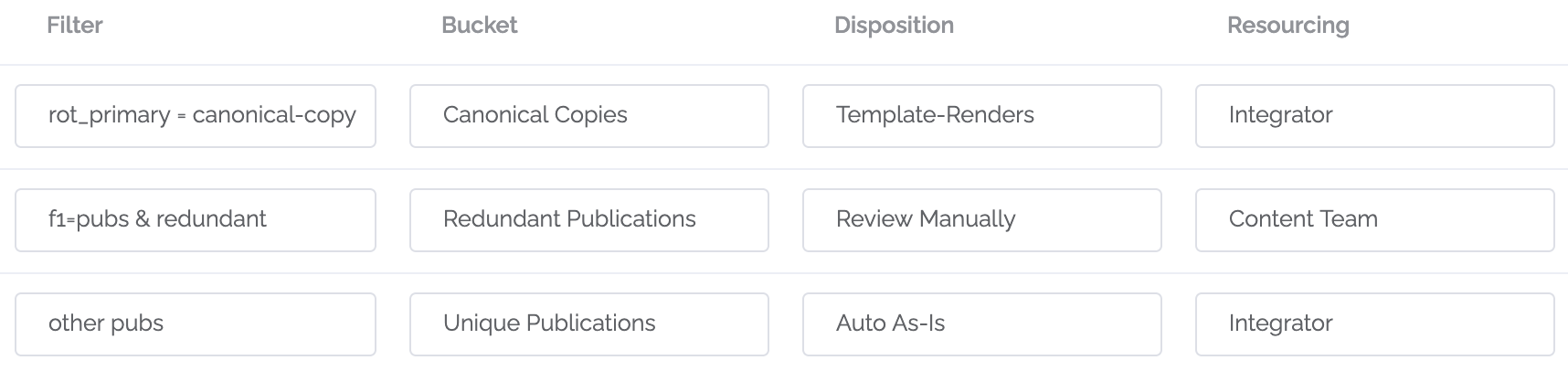

In Content Chimera, you can define rules about your content and then see the implications of those rules. In the example below, we are looking at a tentative set of rules which we can then run to see what content is affected.

Exploratory

We may go into a content analysis with some questions we would like to answer about the content, sometimes vague and sometimes specific. We should start the analysis with these initial questions, but nonetheless we need to approach this as an exploration:

We probably don't know exactly how we will answer the question when we start. We may have particular data sources in mind, but we probably don't know how we'll use that data (or whether the data is any good).

We probably don't even know the questions before we dive in (or we may not yet have precise questions).

The most obvious way of answering the question may be cost-prohibitive, so it would be helpful to inch toward the problem and iterate, rather than dive in with the obvious-but-really-difficult approach.

To pull this off, we need:

Speed. If we're going to iterate, it needs to happen quickly. We can't hand spreadsheets off to lots of people to go through line by line again just because we want to try out a new way of defining success midway through our analysis.

Ability to bring in arbitrary data. We need to be able to add new data as we go. Not only do we not know all the data sources we'll need at the beginning, but throwing the kitchen sink at the problem up front (indiscriminately adding all the data we can lay our hands on) is inefficient.

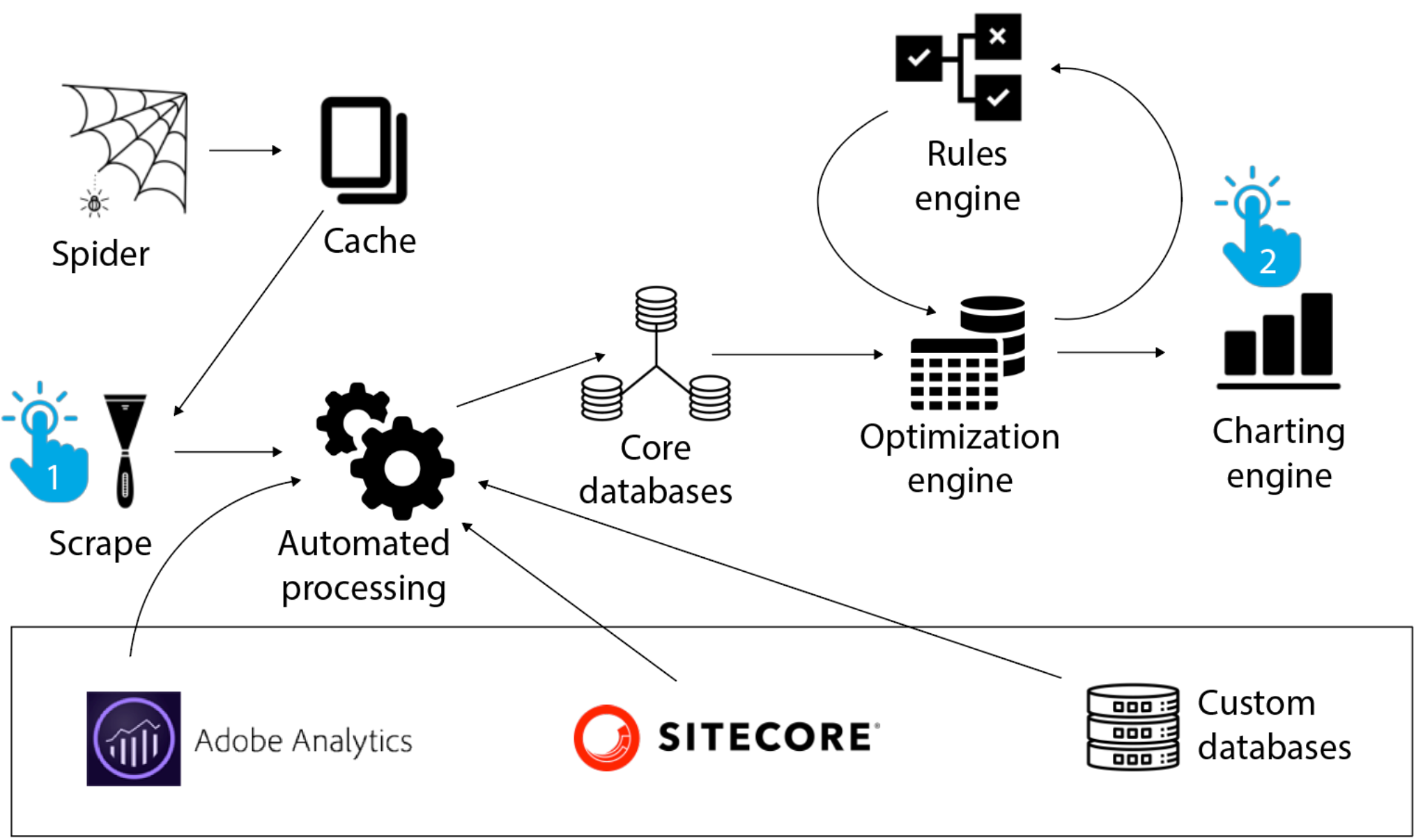

Below is a simplified view of a David Hobbs Consulting project with an international organization spanning multiple websites. In Content Chimera, we were able to bring in data from multiple sources and scraping, as well as a variety of rules to help rationalize all the data from multiple systems. From the exploration perspective, let's say we need to change how we scrape out some information (or scrape out something new). In Content Chimera we can define a new pattern and scrape it. Then we simply go to the charting tool and see the new information graphed along with all the existing data.
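Outside of any particular tool, the "define a new pattern, scrape it, and see it alongside the existing data" loop might look like the following sketch. It assumes the page HTML has already been crawled and stored by URL, and it uses a regular expression for brevity (an HTML parser would usually be more robust). The function names, the sample pages, and the author pattern are hypothetical.

```python
import re


def scrape_field(pages, pattern):
    """Apply one regex (with a single capture group) to each page's HTML."""
    compiled = re.compile(pattern, re.IGNORECASE)
    values = {}
    for url, html in pages.items():
        match = compiled.search(html)
        values[url] = match.group(1).strip() if match else None
    return values


def add_field(records, field, values):
    """Return new records with `field` merged in, matched by URL."""
    return [{**record, field: values.get(record["url"])} for record in records]


# Previously crawled HTML and the existing analysis records (made-up data).
pages = {
    "/a": '<html><meta name="author" content="Pat Lee"><p>...</p></html>',
    "/b": "<html><p>No author metadata here.</p></html>",
}
records = [
    {"url": "/a", "pageviews": 120},
    {"url": "/b", "pageviews": 4},
]

# A new question comes up mid-analysis: who authored each page?
author_pattern = r'<meta\s+name="author"\s+content="([^"]*)"'
records = add_field(records, "author", scrape_field(pages, author_pattern))
```

The existing fields are untouched, so the new field can be charted next to everything already collected without redoing earlier work.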

Repeatable

We need a repeatable process. Although content analysis can be messy and we can't necessarily be 100% repeatable (especially with crawling), we need to:

Have confidence in our analysis.

Be consistent. If you own a digital presence, then one iteration of your analysis should produce results similar to the next. If you provide content analysis for multiple clients, then the processing should be consistent between clients. If you have multiple analysts, then it should be consistent between analysts.

It's really tough to be consistent with a spreadsheet tool. Copying and pasting values or formulas is error-prone, and the risk multiplies with every additional step you have to take.

To be repeatable, we need:

A library of processing steps.

Good defaults.

A consistent configuration (for instance, how crawls occur).

Low manual intervention needs.

The ability to change, tweak, or add one thing such that the change is merged into, and coherent with, the rest of the analysis.
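The list above can be sketched as a small pipeline: a library of named steps, a default configuration, and a single entry point so that nothing depends on manual copying and pasting. This is an assumption-laden illustration rather than a description of any real tool; `DEFAULT_CONFIG`, `STEP_LIBRARY`, the step names, and the threshold are all hypothetical.

```python
from urllib.parse import urlsplit

# Good defaults: every run starts from the same configuration.
DEFAULT_CONFIG = {
    "strip_query_strings": True,
    "low_traffic_threshold": 10,
}


def normalize_urls(items, config):
    """Drop query strings and fragments so the same page is not counted twice."""
    if not config["strip_query_strings"]:
        return items
    return [
        {**item, "url": urlsplit(item["url"])._replace(query="", fragment="").geturl()}
        for item in items
    ]


def flag_low_traffic(items, config):
    """Mark items whose pageviews fall below the configured threshold."""
    threshold = config["low_traffic_threshold"]
    return [{**item, "low_traffic": item["pageviews"] < threshold} for item in items]


# The library of processing steps, addressed by name.
STEP_LIBRARY = {
    "normalize_urls": normalize_urls,
    "flag_low_traffic": flag_low_traffic,
}


def run_pipeline(items, step_names, overrides=None):
    """Run the named steps in order, using the defaults plus any explicit overrides."""
    config = {**DEFAULT_CONFIG, **(overrides or {})}
    for name in step_names:
        items = STEP_LIBRARY[name](items, config)
    return items


items = [
    {"url": "https://www.example.org/a?utm_source=mail", "pageviews": 3},
    {"url": "https://www.example.org/b", "pageviews": 50},
]
steps = ["normalize_urls", "flag_low_traffic"]

first_run = run_pipeline(items, steps)
second_run = run_pipeline(items, steps)  # same input and steps, same result
stricter = run_pipeline(items, steps, overrides={"low_traffic_threshold": 100})
```

Changing one thing (here, the threshold) is an explicit override that flows through the same steps, so the tweak stays coherent with the rest of the analysis.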

SEER or SNORe?

The opposite of SEER is SNORe:

Static. We agree what the analysis is going to be up front, and we statically deliver that analysis.

Narrow. Since we don't have a better way, we deliver a narrow analysis such as analysis on just part of a site.

One-off. We approach each analysis as a one-off process where we copy and paste from a previous analysis or the analyst does it whatever way they've done it in the past.

Repetitive. Instead of looking at patterns, we slog through line by line in the analysis.

ew. Do we really want this?